The Cloud Lab at the Columbia University Graduate School of Architecture, Planning & Preservation

An experimental lab that explores the design of our environment through emerging technologies in computing, interface and device culture.

Interview, Hiroshi Ishiguro

A pioneer in life-like robotics talks about the near future of presence and communication.

In April of 2010, the Cloud Lab visited the Asada Synergistic Intelligence Project, a part of the Japan Science and Technology Agency’s ERATO project. In an anonymous meeting room surrounded by cubicles, we met with Hiroshi Ishiguro to talk about the future of robotics, space and communications.

Ishiguro, an innovator in robotics, is most famous for his Geminoid project, a robotic twin that he has constructed to mimic his every gesture and twitch. Dressed in a uniform of black, Ishiguro’s presentation is as matter-of-fact as his surroundings; robots will be everywhere in the future and he wants to make sure the future of communications is as human-centric as possible. Splitting his time between the leadership of the Socio-Synergistic Intelligence Group at Osaka University and his position as fellow at the Advanced Telecommunications Research Institute, his research interests are tele-presence, non-linguistic communication, embodied intelligence and cognition. He talked to us about the new human-like robotics he is developing, the importance of randomness in learning and what our role should be as the agents of a new “evolution”.

[Ishiguro began with a presentation of his work, entitled “Social Responsibility”.]

"We are not replacing humans with machines, but we are learning about the human by making these machines. Robots reflect and explore that human society."

- Hiroshi Ishiguro

This was my dream. I wanted to build this kind of robot society. The information society that will be building these robots, for that society this is quite a natural direction. Today we don’t see this kind of humanoid robot in a city, but we see many machines - for instance the vending machines found in the Japanese rail system. The vending machine is talking, saying hello. It’s impossible to stop the advancement of this kind of technology; that is human history. The robots we develop always find their source in the human. We walk, so locomotion technologies are important. We manipulate things with our hands, so manipulation is important. We are not replacing humans with machines, but we are learning about the human by making these machines. Robots reflect and explore that human society.

[Ishiguro then led us through the field testing of an early robot prototype, which was deployed in malls to guide visitors. This robot is now commercially available and in wide-spread use in Japan. He then discussed his Geminoid project, an android robot that was developed to model not only his physical appearance, but the series of subconscious gestures and “behaviors” that are mostly unexamined by the field of robotics.]

If a robot has a human-like appearance, then people expect a human-like intelligence. The robot is a hybrid system, a mix of controlled and autonomous motion. For instance, the eye movement and movement of the shoulders are autonomic. We are always moving - a kind of unconscious movement. That kind of movement is automatic, but the conversation we’re having is dependent on these movements.

At the same time, we can connect the voice to an operator on the Internet, so we can have a natural conversation. I can recognize this android as my own body, and others recognize it as me. But others can adopt my body and learn to control it as well. This is a possibility for a tele-operated android - we can exist in a different place.

This is quite a futuristic scene, but these things will be happening soon. We left this android in a cafe for three weeks, and we watched people’s reactions. Half of the people ignore the android; they don’t realize what’s happening. Another half come to the android and start to talk. Once we start to talk back, they can adapt and the communication is quite natural.

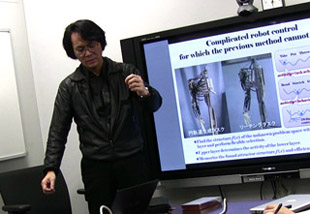

[Ishiguro discusses new paradigms for robotics and the new prototypes he is working with]

A robot is a very good tool for understanding the human. I should mention the process of development of the robots. These robots are so complicated we cannot use the traditional understanding [of robotics]. In order to realize a surrogate, or a more human-like robot, we need other tools. For example, the Honda Asimo uses a very simple motor, which is essentially a rotary motor - it’s not human. The human is actually a [series] of linear actuators. With this kind of [actuator] we can make a more complex, more human robot.

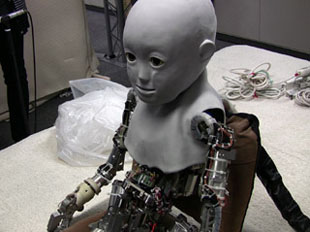

[Ishiguro shows us the CB2, a “soft” robot that is actively trained by a human “mother”. CB2 has pneumatic actuators, over 200 active sensors, including two cameras and microphones. It is autonomous, but largely a data-gathering mechanism for figuring out what actuators are important when manually training a robot. See his paper “CB2: A Child Robot with Biomimetic Body for Cognitive Developmental Robotics”]

In a traditional process, we would train or develop each part, and then put them together into an integrated robot. In this project, we train the entire system at the same time. If the robot has a very complicated body, it is difficult to properly control [and coordinate] all its movements. Therefore, the robot needs help from a caregiver, a mother - in this case my student. My student is teaching the robot how to stand up. This way we can understand which actuators are important for standing up.

[Ishiguro then goes on to explain an alternative way of creating behaviors, using the Japanese word Yuragi to describe noise-based systems and the importance of randomness and biological fluctuation]

" In traditional engineering, the most important principle is how to suppress noise. The next system, or more intelligent or complex system, will figure out how to utilize noise, like a biological system."

We have a very big difference in the robotic and human system. For instance, the human brain only needs 1 watt of energy. Compare that to the supercomputer - it will require 50 thousand watts. Why do we have this big difference? The reason is the noise the human brain makes - the brain makes good use of this noise [Yuragi, or biological fluctuation]. I can try to explain how the biological system uses noise, which is everywhere. At a molecular level everything is a gradient, but for the computer we are suppressing noise and expending energy - we are making the binary mistake. That system takes a lot of energy. In traditional engineering, the most important principle is how to suppress noise. The next system, or more intelligent or complex system, will figure out how to utilize noise, like a biological system. We are working with biologists, and we have developed this fundamental equation. We call this the Yuragi formula, which means biological fluctuation. A [kinematic skeleton] is the traditional system. This is a model; based on the model we could control the robot. But actually, if we have a very complicated robot, if a robot moves in a dynamic environment - we can’t develop a model for that environment. But if we watch a biological system, for instance insects or humans, we see a model that can respond to a dynamic world and control a complicated body. We don’t know how many muscles we have, yet we learn to use our bodies very well. We are using noise [to learn and adapt], for instance Brownian noise (though the biological system employs many different kinds of noise).

So we need to modify our models to incorporate noise. This creates a kind of balance-seeking, where noise and the model control together. If the model fails, then noise takes over. The robot doesn’t need to know how many legs it has, or how many sensors. It needs to start with random movements. Small random movements and large random movements. We can apply these same ideas to a more complicated robot. [We have developed] a robot with the same bone structure as a human and the muscle arrangement, and with such a complicated robot we cannot solve the inverse kinematics equations [that determine movement]. Instead we can control it through random movements. The robot can estimate the distance between its hand and a target. If the distance is long, the robot will begin to randomly move its arm around in a large-scale noise pattern, across all actuators. Eventually it will find the target, and the noise will be suppressed. It does this without ever knowing its own bone structure. We can relate this to the human baby. A baby has many random movements; it looks like a noise equation. From that it develops a series of behaviors that allow it to control [its body]. Babies run these noise-based automatic behaviors. Employing this we can build a more human-like surrogate.
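The reaching behavior described above can be illustrated with a small simulation. What follows is a minimal sketch under stated assumptions, not the lab's Yuragi formula (which the interview does not give): a hypothetical planar arm with three joints, a controller that only receives a scalar distance-to-target reading, and Gaussian noise on every joint whose amplitude is proportional to that distance. The names `hand_position`, `distance_to_target` and `noisy_reach`, and the `gain` and `tolerance` parameters, are invented for this illustration.

```python
import math
import random


def hand_position(angles, link_length=1.0):
    """Forward kinematics of a planar arm. Only the simulated world uses this;
    the controller below never sees the arm's structure."""
    x = y = total = 0.0
    for a in angles:
        total += a
        x += link_length * math.cos(total)
        y += link_length * math.sin(total)
    return x, y


def distance_to_target(angles, target):
    """The single quantity the robot is assumed to be able to estimate."""
    hx, hy = hand_position(angles)
    return math.hypot(hx - target[0], hy - target[1])


def noisy_reach(sense, n_joints, steps=5000, gain=0.2, tolerance=0.05, seed=0):
    """Reach by random joint perturbations (assumed scheme, for illustration).

    `sense` maps a list of joint angles to a distance. Noise amplitude is
    proportional to the current distance, so movements are large when the
    hand is far away and are suppressed as it closes in.
    """
    rng = random.Random(seed)
    angles = [0.0] * n_joints
    dist = sense(angles)
    history = [dist]
    for _ in range(steps):
        if dist < tolerance:
            break
        sigma = gain * dist  # far from target: big noise; near: small noise
        trial = [a + rng.gauss(0.0, sigma) for a in angles]
        trial_dist = sense(trial)
        if trial_dist < dist:  # keep a random movement only if it helped
            angles, dist = trial, trial_dist
        history.append(dist)
    return angles, history


if __name__ == "__main__":
    target = (1.0, 1.5)
    angles, history = noisy_reach(lambda a: distance_to_target(a, target), n_joints=3)
    print("start distance:", history[0])
    print("final distance:", history[-1])
```

The controller is given only the number of actuators and a distance reading, so it never solves inverse kinematics. Keeping a perturbation only when it reduces the distance is a simplification chosen for this sketch; a biological system, as Ishiguro notes, employs many different kinds of noise.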

Interview: Minimal Body, Body Property

The Cloud Lab: As architects we are curious whether or not the responsiveness - or the feeling of presence - is something that can be integrated into the architecture and space?

Ishiguro: [Robotics researchers] call it body property and it is quite important for everything. Appearance is also important. My next challenge is my next Geminoid: a female, and she will be better than [my current Geminoid]. Many people can accept [a female] Geminoid. Even if we use my appearance, all people can control this robot. There was a student in elementary school and she enjoyed very much controlling [the Geminoid]. It’s a mixture of personality [and body] - she has my body, so who is she actually? What I want to say is that she can adapt to this robot, but if we change the appearance of this robot, she may have an altogether different personality or attitude.

TCL: So the behaviors of both the robot and the operator are related to [the robot’s] body properties?

Ishiguro: The human relationship is based on human appearance; basically we want to see a beautiful woman, right? Appearance is very important for everything, that is the reason why I have started the android project. Before, the robot researcher would only focus on how to move the robot and not design the appearance. Every day you are checking your face [appearance] and not the behavior. They are very different.

TCL: What about the appearance of the robots you have [deployed] in the shopping mall?

Ishiguro: That was kind of a minimal design. We needed to design a very robust robot. Now we could put even more stuff - every year we are improving the design.

TCL: Do you think the robot can be emotive, resembling the human? Expressive, without having the physical character of the human being? For example, Aibo (Sony) or Asimo (Honda)?

Ishiguro: Emotion, emoting, objective function, intelligence, or even consciousness is not the objective. I function, I'm subjective, [so] you believe I'm intelligent right?

Where is the function of consciousness or emotion? We believe by watching your behavior that you have consciousness or emotion. She has emotion and she believes I have emotion, therefore we just believe that we have emotion and consciousness. Following from that, the robot can have emotion, because it can have eyes.

Do you have drama [in robotics]? I worked on the robot drama with [the playwright Oriza] Hirata. We used to use the robots as actresses and actors in scenarios [with human actors]. The [human actors] don't need to have a human-like mind. [The director’s] orders are very precise, like “move forty centimeters in four seconds”. But actually we can feel human emotions in the heart when watching this drama. I think that is the proper understanding of emotion, consciousness and even the heart.

TCL: Now that it is possible to grow tissues and organs for biological purposes, how feasible is it to integrate this with robotics?

Ishiguro: This is cyborg technology - as a robotic researcher I don't know what's happening with tissue engineering. [In the future] we will use artificial organs more; in such a way we will replace our natural organism with an artificial organism. The most difficult part is the human brain.

Interview: Programming Bodies

TCL: We are talking about “programming bodies”, in the sense that the body is the last frontier of innovation. Despite the context of many technologies (for instance the rapid incorporation of cell phones for communications) extending the human body, the actual manipulation of the body itself remains taboo. There is a debate on the ethics of changing bodies - who do you identify with in this debate? The engineer, the philosopher, the priest?

Ishiguro: In the Yuragi project, we are working with medical schools - they are interested in tissue engineering and they are trying to combine our technology with theirs. My next collaboration is with philosophers, actually two post-docs from philosophy. I am trying to develop a model of social relationships. My main interest is the human mind and why emotional phenomena appear in human society. I believe we can model human dynamics - we cannot watch just one person in understanding emotion, right? We need to watch the whole society and we need to develop models of that society - that is very important. Today we don't have enough researchers approaching the human model [in robotics], of trying to establish the relationship [between robots and human beings].

TCL: I know you have collaborated with Daito [from Rhizomatics].

Ishiguro: Artists and philosophers are very important collaborators in the next [robots]. Why art? All engineering is coming from art. Giving a methodology to art becomes engineering. To get more inspiration we must fundamentally become artists.

TCL: Do you think artists need to become more familiar with engineering?

Ishiguro: Artists need tools right? For painting you need a canvas and brush. If we can give new tools to the artist then they can develop something new.

TCL: In terms of working method, the laboratory is a very controlled space - but in field tests, for instance the robots you have deployed in malls, hospitals, large spaces, small spaces, what is the feedback that you are getting in terms of spaces that sponsor good interactions [between robots and humans]?

Ishiguro: The real fundamentals come from the field, in interactive robots. We are getting a lot of feedback. In order to have this kind of system we need sensors. We can’t just use the sensors from the robots. It is not enough to [compute and plan] out the necessary activities. We have developed our own sensor networks with camera/laser-scanners and our system is pretty robust. The importance of the teleoperation is through field testing. People ask the robot difficult questions and that is natural. Before that I developed some autonomous robots - but I gave up that [research direction] and focused on the teleoperation - which is good for collecting data. By teleoperation the robot can gather data on how people behave and react, then we can gather the information and make a truly autonomous robot.

TCL: Are there spaces the robot seems to like more than others? For instance, the mall is a crowded place - does this pose problems?

Ishiguro: This is a real environment, this is the market and this is our goal. Most important is to make practical and useful systems.

TCL: Maybe I can twist the question, in the sense that the robot being at an airport or mall,

Ishiguro: [The robot] will not be too different. The airport is similar to the mall. The next target is elderly care [and hospitals]. The [elderly] have a lot of free time where they can go to school and [engage in] crafts. We are thinking of how to support the elderly, and the other target is the elementary school and education.

TCL: For instance, our behavior is very different depending on the space; we operate differently from space to space. Is that not something designed into the robot?

Ishiguro: That is why we develop the telecommunication. If we control the robot we control the situation, we can gather information and then we can develop more autonomous robots. We are in a gradual development process for the developing robot.

TCL: As the human is flexible, you see a very flexible robot that can be context sensitive.

Ishiguro: Right

TCL: When you design [the movements] of a robot, you said that you use random movement as a way of programming. Is this the way the physical arm works?

Ishiguro: In developing a robot, I always have a policy - the fundamental problem in each project. For example, in order to improve the [human and robot] interaction I have decided to build a female android, that people will want to talk to. The Geminoid is a tele-operated android, so I was developing how to control a complicated body. The arm is a bio-inspired robot - I made a copy of the human arm. I always have beforehand a fundamental issue to test.

TCL: Is it a true copy of the physical arm?

Ishiguro: A copy is impossible, in its complete form. Human muscle is very difficult to make, but in the sense of the complexity of the mechanics, we can make something similar.

TCL: In terms of its unconscious movements? Is that something that can be prescribed or simply more random movement?

Ishiguro: Both, but it is complicated. Sometimes it’s reactive, sometimes you can’t expect what happens next, right? It looks like a chaotic system. Therefore we are studying chaotic systems.

Interview: On Evolution vs Development

Ishiguro: Evolutionary processes are important and should happen - but the evolution is given by the human. In my laboratory we are building a new robot, we are improving the robot. That is the evolution.

TCL: A robot most importantly needs to know how to react to humans. That means there is a finite set of conditions we are looking to satisfy - if you believe our behaviors are computable?

Ishiguro: Is evolution easy? I get your point but it’s difficult. How do you think of the computability of humans?

TCL: I think they are constantly evolving too.

Ishiguro: But the evolution is quite slow. The current evolution of humanity is done through technology. By creating new technologies we can evolve. We can evolve rapidly.

TCL: So this is the only way to evolve?

Ishiguro: So evolutionary processes, currently I'm not interested. I am interested in machine learning, developmental process - but evolution is over a much longer time period.

TCL: Parallel to evolution, the child robot you were showing us had to be physically trained to move by a trainer.

Ishiguro: That is development, not evolution.

TCL: ...but it is employing a certain kind of machine learning, so that as it is trained over time, it can perform these functions by itself. Do you not see that as a kind of evolution?

Ishiguro: That is development, evolution is different. For example, a robot would have to be designed through genes - that is evolution. But even for the developmental robot, you have to give a kind of gene, its program code.

TCL: Have you experimented with genetic programming?

Ishiguro: We are using genetic programming but only in the context of very simple creatures, such as insects. But our main purpose is to have a more human-like robot and we cannot simulate the whole process of human evolution.

Interview: Sending Presence to Distant Places

TCL: How much feedback does the person operating the Geminoid receive? Can the Geminoid gauge the comfort level of its operator? Do you anticipate using bio-feedback or other senses?

Ishiguro: We were surprised a little to find that there are very few people who can accept [the full sensory information of the Geminoid]. For example, a newscaster operated the robot and she quickly adapted to the new environment. It was a surprise for us but at the same time, this is a communications technology, one of the possibilities for this kind of robot in a “surrogate” world. The telephone reached distant places, the TV broadcasts visual images to distant places, cell phones can connect you anytime anywhere. What is the next media? To send yourself to a distant place and exist there. I really think we want to have that kind of world.

TCL: How much does it cost to send your Geminoid overseas?

Ishiguro: Shipping costs? $50 thousand [US dollars].

TCL: Is it cheaper to invite you to come lecture [in person]?

Ishiguro: The next one will be cheaper, $10 thousand [USD]. We're making two of a newer version. One will be female, the other will be a minimal design. So my question is what is the minimal design of human? Does it need ears? Hair?

My current Geminoid has 55 actuators, the next will have only 10 actuators - the purpose is to reduce cost. The cost of my current Geminoid is about $600 thousand [USD], $1 million including the whole system. The next female will be less than $100 thousand, then museums can buy the robot. That is one active project, the other one is to create a smaller humanoid design. We want to work with a cellphone company. I don't like the current cellphone. Why are all cellphones square? I don't understand, the cell phone is talking. We need to give cell phones more human-like appearance.

TCL: Do you have a desire to speak through a female Geminoid?

Ishiguro: [with a grin] It’s not my hobby [laughter] but if you want you can try.

TCL: In some sense, that is a powerful commercialization of the technology - you can try these different characters and bodies.

Ishiguro: Yes, right. I think there are many possibilities.

TCL: What do you think are the limits of the Geminoids? Technology is always extending our capabilities, but is the Geminoid extending us or are we still extending it?

Ishiguro: People typically expect the Geminoid to be able to manipulate something. Actually, the Geminoid is just for communications. Physically it is weak; it’s much more for communication. The actuators themselves are not powerful enough to manipulate much. The Geminoid is a surrogate, whereas the manipulation [of objects] can be accomplished with another mechanism.

TCL: Is the ultimate goal to replicate the human being, or to extend its capabilities?

Ishiguro: Maybe both...

TCL: What is the limit case of the technology then? If you are no longer physically present in a space, your robot can do anything. Is this kind of freedom a goal?

Ishiguro: The goal [of our research] is ultimately to understand the human. On the other hand we can't stop technological development in the near future. We have to seriously consider how we should use this technology as a society. But my goal is still to really think through these issues of technology, which is still far behind the real understanding of the human body. At least for 20 or 30 years, before retiring.

TCL: So this is always a consideration. Technology itself has no evil or good, even though pure science can be a generator or a catalyst.

Ishiguro: My role as a scientist is to develop a new technology, a technology that can change the world. It can be used both ways, which becomes the “real” technology, beyond my role in developing the technology.

TCL: Is it important for the inventor to have a role in the decision?

Ishiguro: People expect that the economy will be supported by technological development. Before the cell phone or computing, people discussed whether it was a good thing or not, but finally we ignored that discussion and made the phone. The economy is growing and ultimately we can't stop the technological evolution.

The human is quite egotistic, always the discussion is posed around the issues of the moral, social, the responsible. But this is always after the technological development - so we humans are not so clever or pro-active. We as a species are eager for money but I believe in the university and the values of education. I hope with these tools we can have a correct understanding of the technology.

Authors

Toru Hasegawa is co-director of Proxy and an adjunct assistant professor at the Columbia University Graduate School of Architecture. There, Toru co-directs the GSAPP Cloud Lab.

Mark Collins is co-director of Proxy and an adjunct assistant professor at the Columbia University Graduate School of Architecture. There, Mark co-directs the GSAPP Cloud Lab.

The lab resides at the Graduate School of Architecture, Planning & Preservation, Columbia University

400 Avery Hall, 1172 Amsterdam Avenue, New York, NY 10027

Contact us at inquiries @ thecloudlab.org

Copyright 2014